loading, partitioning, and querying parquet data in kdb

Published 21 hours ago • 25 plays • Length 9:50

Similar videos

-

9:49

9:49

intro to kdb | loading data & ipc | publishing & subscribing

-

5:16

5:16

an introduction to apache parquet

-

4:32

4:32

query and load externally partitioned parquet data from bigquery

-

11:28

11:28

parquet file format - explained to a 5 year old!

-

4:02

4:02

querying parquet files on s3 with duckdb

-

8:02

8:02

what is apache parquet file?

-

5:31

5:31

row groups in apache parquet

-

59:31

59:31

data lake fundamentals, apache iceberg and parquet in 60 minutes on dataexpert.io

-

41:39

41:39

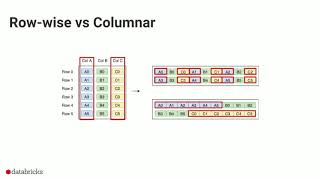

the columnar roadmap: apache parquet and apache arrow

-

55:27

55:27

gábor szárnyas - duckdb: the power of a data warehouse in your python process

-

40:46

40:46

the parquet format and performance optimization opportunities boudewijn braams (databricks)

-

4:19

4:19

using duckdb to analyze the data quality of apache parquet files

-

4:21

4:21

reading parquet files in python

-

4:14

4:14

how primary keys work in clickhouse

-

2:43

2:43

load parquet file from s3 using the ui

-

12:54

12:54

this incredible trick will speed up your data processes.

-

7:10

7:10

row group size in parquet: not too big, not too small

-

4:49

4:49

exporting csv files to parquet with pandas, polars, and duckdb

-

2:45

2:45

using duckdb to diff apache parquet schemas

-

8:00

8:00

how to query and ingest parquet files with clickhouse