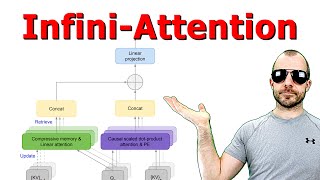

new: infini attention w/ 1 mio context length

Published 6 months ago • 2K plays • Length 21:23

Similar videos

-

24:34

24:34

ring attention explained: 1 mio context length

-

37:17

37:17

leave no context behind: efficient infinite context transformers with infini-attention

-

37:21

37:21

genai leave no context efficient infini context transformers w infini attention

-

6:03

6:03

efficient infinite context transformers with infini-attention | large language models (llms)

-

1:05

1:05

google's infini-attention: infinite context in language models 🤯 #ai #googleai #nlp

-

24:52

24:52

mighty new transformerfam (feedback attention mem)

-

14:02

14:02

ai research radar | groundhog | efficient infinite context transformers with infini-attention | goex

-

2:01

2:01

this video is not in reverse.

-

24:44

24:44

mathematician explains infinity in 5 levels of difficulty | wired

-

8:07

8:07

differential transformer explained in simple words

-

30:58

30:58

next-gen ai: recurrentgemma (long context length)

-

14:55

14:55

new by google: michelangelo (1 mio token reasoning)

-

58:30

58:30

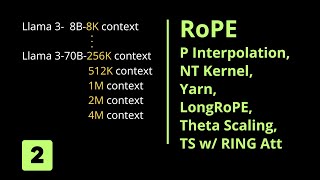

longrope & theta scaling to 1 mio token (2/2)

-

0:21

0:21

google unveils gemini pro 1.5 with 1 million token context length | genai news cw7 #aigenerated

-

23:16

23:16

infini-attention: google's solution for infinite memory in llms - the ai paper club podcast

-

18:48

18:48

new: unlimited token length for llms by microsoft (longnet explained)

-

1:05:11

1:05:11

infini-attention and am-radio

-

26:40

26:40

leave no context behind: efficient infinite context transformers with infini-attention

-

6:22

6:22

leave no context behind-efficient infinite context transformers - audio podcast

-

5:09

5:09

discover the magic of ai: how llms write like humans!

-

![[audio notes] flashattention: fast and memory-efficient exact attention with io-awareness](https://i.ytimg.com/vi/z28H3Ro764Q/mqdefault.jpg) 26:28

26:28

[audio notes] flashattention: fast and memory-efficient exact attention with io-awareness