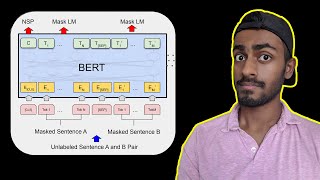

next sentence prediction (nsp) in bert pretraining explained

Published 2 years ago • 1.5K plays • Length 3:46
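In BERT pretraining, next sentence prediction feeds the model a pair of segments, A and B. In about 50% of training pairs B really follows A in the corpus ("IsNext"). In the rest, B is a random sentence ("NotNext"). The model classifies the pair from the final `[CLS]` representation. The sketch below shows only the data side of that setup, under several assumptions. Each document is a list of sentences in reading order. Negatives are drawn from a *different* document so they are unlikely to be true continuations. Whitespace splitting stands in for a real WordPiece tokenizer. The helper names `make_nsp_pairs` and `to_bert_input` are hypothetical and do not come from any library.

```python
import random
from typing import List, Tuple


def make_nsp_pairs(docs: List[List[str]], seed: int = 0) -> List[Tuple[str, str, str]]:
    """Build (sentence_a, sentence_b, label) triples for next sentence prediction.

    Hypothetical helper. Assumes each document is a list of sentences in order.
    Negatives come from a different document so B is unlikely to follow A.
    """
    rng = random.Random(seed)
    pairs = []
    for d_idx, doc in enumerate(docs):
        for i in range(len(doc) - 1):
            sent_a = doc[i]
            if rng.random() < 0.5 or len(docs) < 2:
                # Positive example: B is the sentence that actually follows A.
                pairs.append((sent_a, doc[i + 1], "IsNext"))
            else:
                # Negative example: B is a random sentence from another document.
                other = rng.choice([j for j in range(len(docs)) if j != d_idx])
                pairs.append((sent_a, rng.choice(docs[other]), "NotNext"))
    return pairs


def to_bert_input(sent_a: str, sent_b: str) -> Tuple[List[str], List[int]]:
    """Pack a pair as [CLS] A [SEP] B [SEP] with segment ids 0 for A and 1 for B.

    Hypothetical helper. Whitespace splitting stands in for WordPiece tokenization.
    """
    tokens_a = sent_a.split()
    tokens_b = sent_b.split()
    tokens = ["[CLS]"] + tokens_a + ["[SEP]"] + tokens_b + ["[SEP]"]
    # [CLS], A tokens and the first [SEP] belong to segment 0.
    # B tokens and the final [SEP] belong to segment 1.
    segment_ids = [0] * (len(tokens_a) + 2) + [1] * (len(tokens_b) + 1)
    return tokens, segment_ids


if __name__ == "__main__":
    docs = [["the cat sat .", "it purred ."], ["stocks fell today .", "investors worried ."]]
    for a, b, label in make_nsp_pairs(docs, seed=1):
        tokens, segs = to_bert_input(a, b)
        print(label, tokens, segs)
```

The main design choice is drawing negatives from another document. The original recipe samples B at random from the whole corpus, so a real pipeline would also need a tokenizer, truncation to a maximum length, and the masked language modeling targets that BERT trains jointly with NSP.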

Similar videos

- 6:12 • masked language modeling (mlm) in bert pretraining explained
- 13:43 • training bert #3 - next sentence prediction (nsp)
- 20:14 • bert model in nlp explained
- 0:51 • bert networks in 60 seconds
- 36:45 • training bert #4 - train with next sentence prediction (nsp)
- 8:52 • albert (bert) model in nlp explained
- 31:56 • sentiment analysis with bert neural network and python
- 1:56:20 • let's build gpt: from scratch, in code, spelled out.
- 9:24 • linear interpolation in excel | fill in missing values
- 2:38 • roberta model (bert) in nlp explained
- 1:00 • bert vs gpt
- 0:42 • defining an lstm neural network for time series forecasting in pytorch #shorts
- 2:47 • electra model (bert) in nlp explained
- 0:50 • what is bert
- 0:35 • preparing a time series dataset for supervised learning forecasting #shorts
- 23:03 • what is bert? | deep learning tutorial 46 (tensorflow, keras & python)
- 11:37 • bert neural network - explained!
- 0:48 • why bert and gpt over transformers
- 40:50 • nlp | solving #nlp problems #bert #ngram #self-attention #transformer
- 14:21 • spanbert: improving pre-training by representing and predicting spans (research paper walkthrough)
- 9:11 • transformers, explained: understand the model behind gpt, bert, and t5