towards monosemanticity explained

Published 5 months ago • 690 plays • Length 1:09:42

Similar videos

-

43:40

towards monosemanticity: decomposing language models into understandable components

-

3:52

explained: optogenetics

-

15:01

illustrated guide to transformers neural network: a step by step explanation

-

8:38

the rise of humanoid robots: is humanity ready for synthetic humans?

-

18:08

transformer neural networks derived from scratch

-

18:21

query, key and value matrix for attention mechanisms in large language models

-

1:11:41

stanford cs25: v2 i introduction to transformers w/ andrej karpathy

-

9:11

transformers, explained: understand the model behind gpt, bert, and t5

-

5:34

attention mechanism: overview

-

1:56:20

let's build gpt: from scratch, in code, spelled out.

-

2:25

how to use dynamic region-growing in mimics | mimics innovation suite | materialise medical

-

13:05

transformer neural networks - explained! (attention is all you need)

-

58:04

attention is all you need (transformer) - model explanation (including math), inference and training

-

29:46

29:46

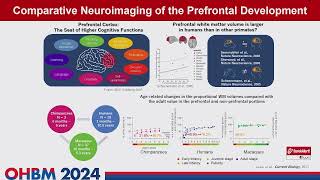

ohbm 2024 | educational course | an introduction to cross-species comparative neuroimaging | part 1

-

8:38

transformers: the best idea in ai | andrej karpathy and lex fridman

-

8:33

ohbm 2022 | 142 | talk | xiaoxin hao | the neurocognitive mechanism of processing embedded structu…

-

6:45

interoperability strategic roadmap

-

3:19

ittap24. konovalenko, maruschak computer assisted analysis of transgranular and intergranular microme

-

30:27

ohbm 2024 | educational course | an introduction to cross-species comparative neuroimaging | part 5

-

12:26

rasa algorithm whiteboard - transformers & attention 2: keys, values, queries

-

17:44

ohbm 2024 | symposium | tomoko sakai | development of an integrative eco-platform for knowledge-d…
